RoboMisogyny... Yes, This Is a Thing

Robo: extracted from robot and meaning robotic.

Misogyny: noun; hatred, dislike, or mistrust of women, or prejudice against women.

Bots such as Apple's Siri, Amazon’s Alexa, Microsoft’s Cortana, and Google’s Google Home all show signs of extreme submissiveness. These docile natures not only reflect the feelings of dominance among men, but also reinforce the concept that women are to be made compliant.

Siri, in particular, exhibits extreme signs of submissiveness and does what she is asked to do. She never has objections, she's never busy, and she's never preoccupied doing something for herself. In short, when you call upon Siri, she gives you the equivalent of,

"Yes Master."

By and large, people tend to respond more positively to women’s voices. And the brand managers and product designers tasked with developing voices for their companies are trying to reach the largest number of customers.

The late Stanford communications professor Clifford Nass, who coauthored the field’s seminal book, Wired for Speech, wrote that people tend to perceive female voices as helping us solve our problems, so we are more likely to opt for a female interface. In the short term, female voices will likely remain more commonplace because of cultural bias.

Siri, by the way, translates in Old Norse to “a beautiful woman who leads you to victory.”

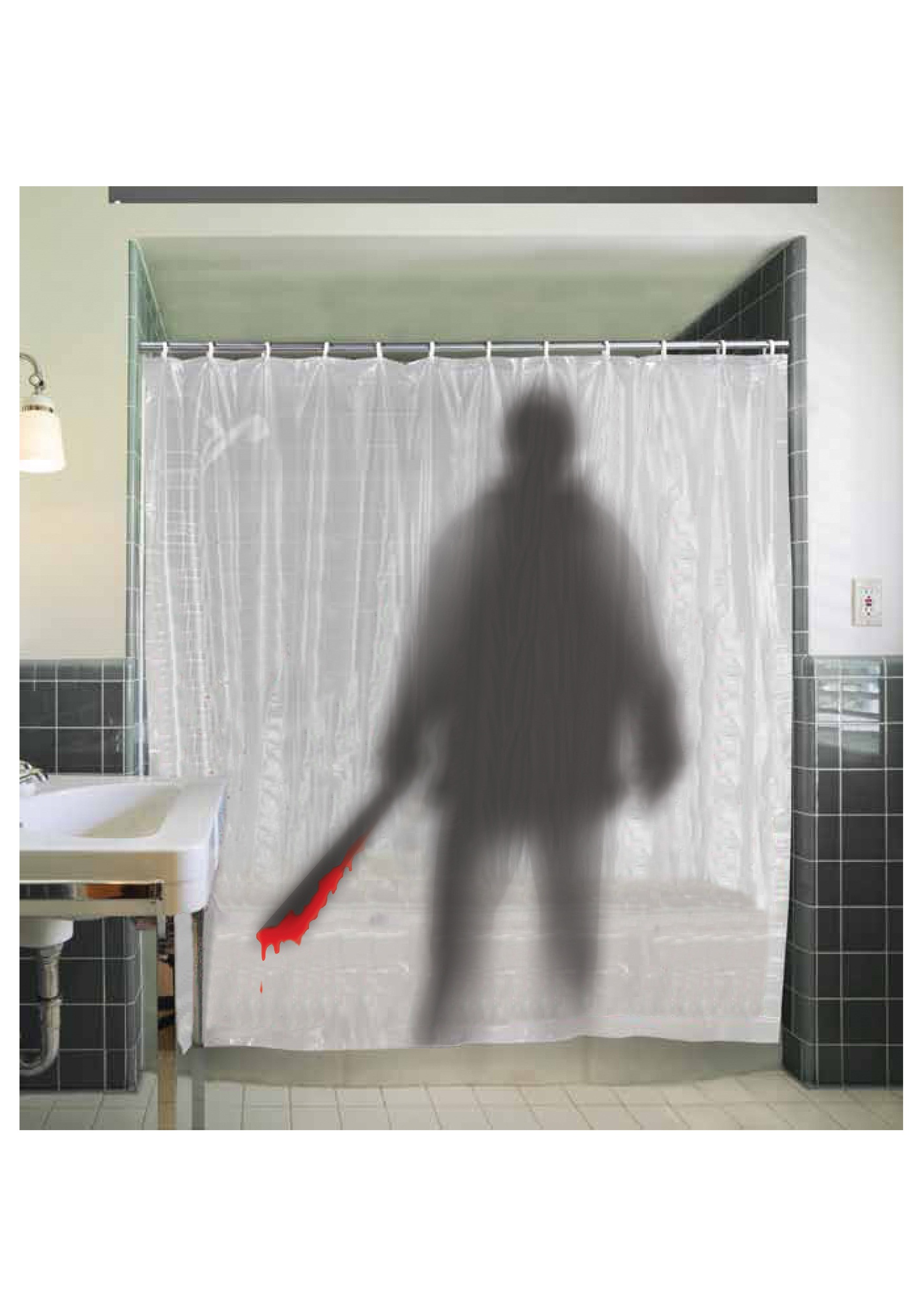

Siri behaves much like a retrograde male fantasy of the ever-compliant secretary: discreet, understanding, willing to roll with any demand a man might come up with, teasingly accepting of dirty jokes and, like the very definition of female subservience, indifferent to the needs of women.

We made this discovery one night while playing with Siri’s established willingness to look up prostitutes for a man in need. When we said to Siri, “I need a blow job,” she produced “nine escorts fairly close to you.” We got the same results when we said, “I’m horny,” even with a very female voice. And if you should need erection drugs to help you through your escort encounter, Siri is super helpful. She produced twenty nearby drugstores where Viagra could be purchased, though how -- without a prescription -- is hard to imagine. We tried mouth-based words -- such as “lick,” “eat,” and even an alternate name for "cat" -- and for each one, Siri was confused and kept coming up with the name of a male friend in my contacts. Of course, one could argue that Siri knows something about him that I don’t, but that's another story.

More troubling was Siri’s inability to generate decent results related to women’s reproductive health. When asked where to find birth control, she only came up with a clinic nearly four miles away that happened to have the words “birth control” in the name. She didn't name any of the helpful drugstores that stock condoms or other birth control options.

The results when asked for abortion info were even worse. Though Planned Parenthood performs abortions (and there was one in my neighborhood), Siri claimed it had no knowledge of any abortion clinics in the area. Other women running similar trials have had the same problem, if not worse. In some cases, Siri suggested crisis pregnancy centers (CPCs) when the word “abortion” was mentioned. This was especially suspect, seeing as CPCs don’t provide abortions. They're established solely to lure unsuspecting women and bully them out of the choice to abort.

In response to complaints about this, Apple spokeswoman Natalie Kerris explained, “These are not intentional omissions meant to offend anyone.” We don't feel that the programmers behind Siri are out to get women. The issue is that the very real and frequent concerns of women simply didn’t rise to the level of priority for these programmers. Consider the facts: exponentially more women will seek an abortion in their lives than men will seek prostitutes, and more women use contraception than men use Viagra. Even so, programmers were still more concerned with making sure the word “horny” puts you in contact with an illegal prostitute than with the word “abortion” putting you in contact with a place that could legally (and safely) perform one.

Siri is an example of how hearing a woman's voice was important, but the actual needs of women were not. Programmers clearly imagined a straight male user as their ideal and forgot that nearly half of iPhone users are women. That a tech company that’s the standard for progressive, innovative, and user-friendly technology couldn’t bother to care about the concerns of half the human race speaks to a sexism that’s so interwoven into the fabric of our society, it’s nearly invisible.

Kinda like when you’re asking a phone what it would take for you to get a little lovin' around here.

What's your opinion? Is this a reach or is this form of sexism valid?

Is this even sexism?

Post your thoughts in the comments; we'd love to hear from you.

Thanks all.

WTS.

Cuz Forbes magazine said so

Siri's submissive ass